The second week (well, a fortnight really) of the 11.133x MOOC moved us on to developing some resources that will help us to evaluate education technology and make a selection.

Because I’m applying this to an actual project (two birds with one stone and all) at work, it took me a little longer than I’d hoped but I’m still keeping up. It was actually fairly enlightening because the tool that I had assumed we would end up using wasn’t the one that was shown to be the most appropriate for our needs. I was also able to develop a set of resources (and the start of a really horribly messy flowchart) that my team will be able to use for evaluating technology down the road.

I’m just going to copy/paste the posts that I made in the MOOC – with the tools – as I think they explain what I did better than glibly trying to rehash it here on the fly.

Four tools for identifying and evaluating educational technology

I’ve been caught up with work things this week so it’s taken me a little while to get back to this assignment, but I’m glad, as it has enabled me to see the approaches that other people have taken and clarify my ideas a little.

My biggest challenge is that I started this MOOC with a fairly specific Ed Tech project in mind – identifying the best option in student lecture instant response systems. The assignment however asks us to consider tools that might support evaluating Ed Tech in broader terms and I can definitely see the value in this as well. This has started me thinking that there are actually several stages in this process that would probably be best supported by very different tools.

One thing that I have noticed (and disagreed with) in the approaches that some people have taken is that their tools seem to begin with the assumption that the type of technology has already been selected, and only then are the educational/pedagogical strengths of that technology assessed. This seems completely backwards to me, as I would argue that we need to look at the educational need first and then try to map it to a type of technology.

In my case, the need/problem is that student engagement in lectures is low and a possible solution is that the lecturer/teacher would like to get better feedback about how much the students are understanding in real time so that she can adjust the content/delivery if needed.

Matching the educational need to the right tool

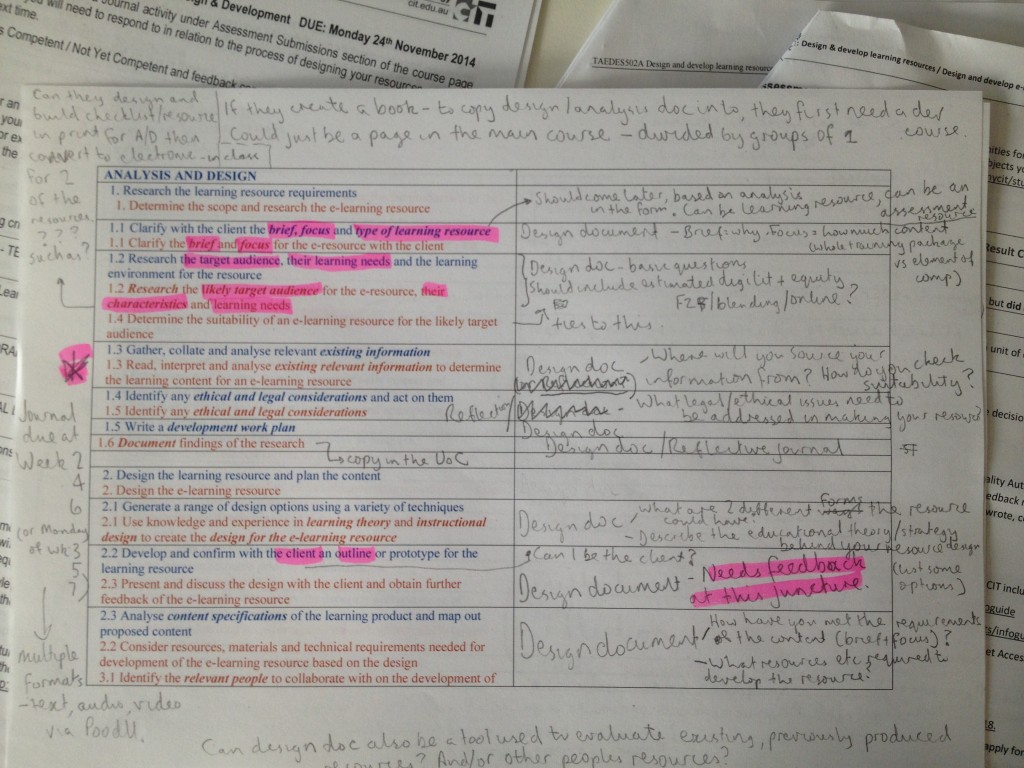

When I started working on this I thought that this process required three separate steps – a flowchart to point to suitable types of technology, a checklist to see whether it would be suitable and then a rubric to compare products.

As I developed these, I realised that we also need to clearly identify the teacher’s educational needs for the technology, so I have added a short survey about this here, at the beginning of this stage.

I also think that a flowchart (ideally interactive) could be a helpful tool in this stage of identifying technology. (There is a link to the beginning of the flowchart below)

I have been working on a model that covers 6 key areas of teaching and learning activity that I think could act as the starting point for this flowchart but I recognise that such a tool would require a huge amount of work so I have just started with an example of how this might look. (Given that I have already identified the type of tool that I’m looking at for my project, I’m going to focus more on the tool to select the specific application)

I also recognise that even for my scenario, the starting point could be Communication or Reflection/Feedback, so this could be a very messy and large tool.

The key activities of teaching/learning are:

• Sharing content

• Communication

• Managing students

• Assessment tasks

• Practical activities

• Reflection / Feedback

I have created a Padlet at http://padlet.com/gamerlearner/edTechFlowchart and a LucidChart at https://www.lucidchart.com/invitations/accept/6645af78-85fd-4dcd-92fe-998149cf68b2 if you are interested in sharing ideas for types of tools, questions or feel like helping me to build this flowchart.

I haven’t built many flowcharts (as my example surely demonstrates) but I think that if it was possible to remove irrelevant options by clicking on sections, this could be achievable.
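As a rough sketch of what "removing irrelevant options" could look like, the flowchart can be treated as a lookup from activity areas to types of technology. The six areas are the ones listed above; the tool types mapped to them are placeholders I have made up for illustration, not a finished taxonomy.

```python
# The six activity areas come from the list above. The tool types attached
# to each area are illustrative placeholders only (an assumption).
TOOL_TYPES = {
    "Sharing content": ["lecture capture", "document repository"],
    "Communication": ["discussion forum", "student response system"],
    "Managing students": ["learning management system"],
    "Assessment tasks": ["online quiz tool"],
    "Practical activities": ["simulation"],
    "Reflection / Feedback": ["student response system", "e-portfolio"],
}


def candidate_tool_types(selected_areas):
    """Return the tool types for the selected areas, ignoring all other areas.

    A tool type reachable from more than one area is listed only once.
    """
    candidates = []
    for area in selected_areas:
        for tool_type in TOOL_TYPES[area]:
            if tool_type not in candidates:
                candidates.append(tool_type)
    return candidates


# My scenario could start from either of these two areas.
print(candidate_tool_types(["Communication", "Reflection / Feedback"]))
```

Selecting areas narrows the list in the same way that clicking on sections of an interactive flowchart would, and a tool type that sits under two areas (as the response system does in my scenario) only appears once.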

Is the technology worthwhile?

The second phase of this evaluation lets us look more closely at the features of a type of technology to determine whether it is worth pursuing. I would say that there are general criteria that will apply to any type of technology and there would also need to be specific criteria for the use case. (E.g. for my lecture clicker use case, it will need to support 350+ users – not all platforms/apps will do this but as long as some can, it should be considered suitable)

Within this there are also essential criteria and nice-to-have criteria. If a tool can’t meet the essential criteria then it isn’t fit for purpose, so I would say that a simple checklist should be sufficient as a tool will either meet a need or it won’t. (This stage may require some research and understanding of the available options first). This stage should also make it possible to compare different types of platforms/tools that could address the same educational needs. (In my case, for example, providing physical hardware based “clickers” vs using mobile/web based apps)

This checklist should address general needs, which I have broken down into student, teacher and organisational needs and which could be applied to any educational need. It should also include scenario-specific criteria.
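To make the essential vs nice-to-have logic concrete, here is a minimal sketch of how such a checklist could be evaluated. The `Criterion` class and the `evaluate_checklist` function are names I have invented for this example, and apart from the 350+ users requirement the criteria themselves are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Criterion:
    description: str
    essential: bool  # False means "nice to have"
    met: bool


def evaluate_checklist(criteria):
    """A type of technology is fit for purpose only if every essential criterion is met."""
    unmet_essential = [c.description for c in criteria if c.essential and not c.met]
    nice_to_have_met = sum(1 for c in criteria if not c.essential and c.met)
    return {
        "fit_for_purpose": not unmet_essential,
        "unmet_essential": unmet_essential,
        "nice_to_have_met": nice_to_have_met,
    }


# Example checklist for mobile/web based apps.
web_app_checklist = [
    Criterion("Supports 350+ users", essential=True, met=True),
    Criterion("Works on students' own devices", essential=True, met=True),  # hypothetical
    Criterion("Exports results for later review", essential=False, met=False),  # hypothetical
]

result = evaluate_checklist(web_app_checklist)
print(result["fit_for_purpose"], result["unmet_essential"])
```

Running the same checklist against each type of technology (for example hardware clickers and web apps) gives a simple yes/no on fitness for purpose, with the nice-to-have count available as a tie-breaker.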

Evaluating products

It’s hard to know exactly what the quality of the tool or the learning experiences will be. We need to make assumptions based on the information that is available. I would recommend some initial testing wherever possible.

I’m not convinced that it is possible to determine the quality of the learning outcomes from using the tool so I have excluded these from the rubric.

Some of the criteria could be applied to any educational technology and some are specifically relevant to the student response / clicker tool that I am investigating.
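One way a rubric like this could be turned into a comparison is a weighted average across criteria. This is only a sketch: the criteria names, weights, 1 to 4 scale, product names and scores below are all made up for illustration and are not the contents or results of my actual rubric.

```python
# Hypothetical rubric: criterion name -> weight. The first two could apply
# to any educational technology; the last is specific to response systems.
RUBRIC_WEIGHTS = {
    "ease of use": 2,
    "cost": 1,
    "copes with a 350+ student lecture": 3,
}


def weighted_score(scores):
    """Weighted average of a product's rubric scores (each scored 1 to 4)."""
    total_weight = sum(RUBRIC_WEIGHTS.values())
    weighted_sum = sum(RUBRIC_WEIGHTS[name] * scores[name] for name in RUBRIC_WEIGHTS)
    return weighted_sum / total_weight


# Hypothetical products with made-up scores.
products = {
    "Product A": {"ease of use": 3, "cost": 2, "copes with a 350+ student lecture": 4},
    "Product B": {"ease of use": 4, "cost": 4, "copes with a 350+ student lecture": 2},
}

# Rank products from highest to lowest weighted score.
ranked = sorted(products.items(), key=lambda item: weighted_score(item[1]), reverse=True)
for name, scores in ranked:
    print(f"{name}: {weighted_score(scores):.2f}")
```

Weighting the scenario-specific criterion most heavily means a product that scores well on general criteria can still rank lower if it struggles with the thing we actually need it for.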

Lecture Response System pitch

This was slightly rushed but it does reflect the results of the actual evaluation that I have carried out into this technology so far. (I’m still waiting to have some questions answered from one of the products)

I have emphasised the learning needs that we identified, looked quickly at the key factors in the evaluation and then presented the main selling points of the particular tool. From there I would encourage the teacher/lecturer to speak further to me about the finer details of the tool and our implementation plan.

Any thoughts or feedback on this would be most welcome.

Edit: I’ve realised that I missed some of the questions – well kind of.

The biggest challenge will be how our network copes with 350+ people trying to connect to something at once. The internet and phone texting options were among the appealing parts of the tool in this regard, as they will hopefully reduce this number.

Awesome would look like large numbers of responses to poll questions and the lecturer being able to adjust their teaching style – either re-explaining a concept or moving to a new one – based on the student responses.

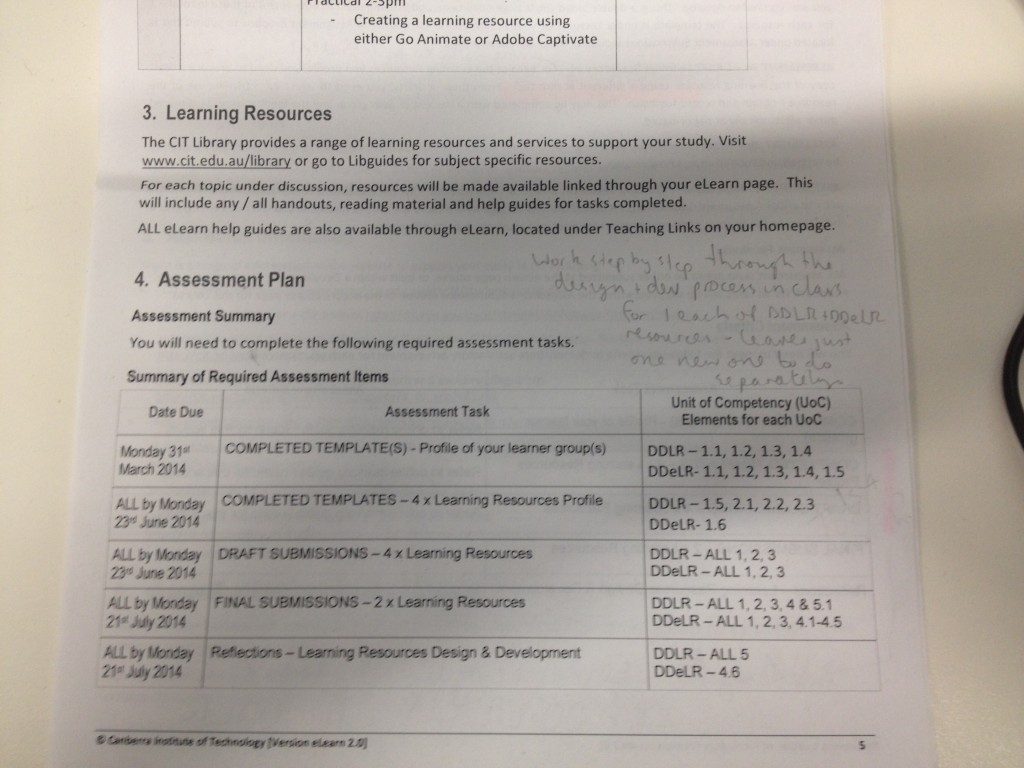

These are the documents from these two assignments.

• Lecture Response systemsPitch

• ClickersEdTechEvaluationRubric

• EducationalTechnologyNeedsSurvey Colin

• Education Technology Checklist2